We were given the task of streaming the FIFA 2014 World Cup, and I think the experience is worth sharing. This is a quick overview of the architecture, the components, the pain, the lessons learned, the open source projects and more.

The numbers

- GER 7×1 BRA (yeah, we’re not proud of it)

- 0.5M simultaneous users @ a single game – ARG x SUI

- 580Gbps @ a single game – ARG x SUI

- ≈ 1600 years of video watched @ the whole event

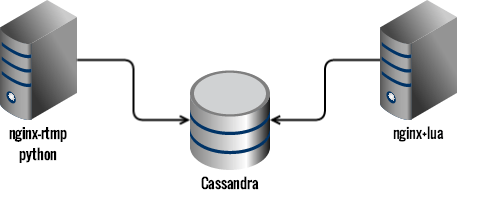

The core overview

The project was to receive an input stream, generate an HLS output stream for hundreds of thousands of viewers and provide a great experience for final users:

- Fetch the RTMP input stream

- Generate HLS and send it to Cassandra

- Fetch binary and meta data from Cassandra and rebuild the HLS playlists with Nginx+lua

- Serve and cache the live content in a scalable way

- Design and implement the player

If you want to understand why we chose HLS, check this presentation (available only in pt-BR).

tip: sometimes we need to rebuild some things from scratch.

The input

The live stream reaches our servers as RTMP. We were using EvoStream (we’re now moving to nginx-rtmp) to receive this input and generate HLS output in a known folder. Python daemons running on the same machine watch this folder, parse the m3u8 playlists and post the data to Cassandra.
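To make the parsing step concrete, here is a minimal sketch of reading a media playlist into a list of segments. It uses only the standard library and is not the parser we ran in production; the function name and the dictionary keys are assumptions made for illustration.

```python
def parse_media_playlist(text):
    """Parse a simple HLS media playlist into (media_sequence, segments)."""
    media_sequence = 0
    segments = []
    pending_duration = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            media_sequence = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # Format: "#EXTINF:<duration>,<optional title>"
            pending_duration = float(line.split(":", 1)[1].split(",", 1)[0])
        elif line and not line.startswith("#") and pending_duration is not None:
            # A URI line closes the segment announced by the previous #EXTINF.
            segments.append({
                "sequence": media_sequence + len(segments),
                "uri": line,
                "duration": pending_duration,
            })
            pending_duration = None
    return media_sequence, segments
```

Each returned segment carries the fields a daemon would need to post to the storage layer: its sequence number, its URI and its duration.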

To watch for file modifications and be notified of these events, we first tried watchdog, but for some reason we couldn’t make it work as fast as we expected, so we switched to pyinotify.
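A watcher along these lines can be sketched with pyinotify as follows. This is an illustration under assumptions, not our daemon: the `is_playlist` and `watch` helpers and the `on_playlist` callback are hypothetical names, and pyinotify is a third-party, Linux-only package, so it is imported lazily.

```python
import os


def is_playlist(path):
    """Return True for files the daemon should react to (HLS playlists)."""
    return os.path.basename(path).endswith(".m3u8")


def watch(folder, on_playlist):
    """Block forever, calling on_playlist(path) whenever a playlist is written."""
    import pyinotify  # third-party, Linux only; imported lazily

    class Handler(pyinotify.ProcessEvent):
        def process_IN_CLOSE_WRITE(self, event):
            if is_playlist(event.pathname):
                on_playlist(event.pathname)

        def process_IN_MOVED_TO(self, event):
            # Covers the case where a file is written elsewhere and renamed into place.
            if is_playlist(event.pathname):
                on_playlist(event.pathname)

    manager = pyinotify.WatchManager()
    manager.add_watch(folder, pyinotify.IN_CLOSE_WRITE | pyinotify.IN_MOVED_TO)
    pyinotify.Notifier(manager, Handler()).loop()
```

Calling `watch("/path/to/hls", print)` would print the path of every playlist as soon as the segmenter finishes writing it.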

Another challenge was making the Python program scale across multiple CPU cores; we ended up creating multiple Python processes and using async execution.

tip: maybe the best language / tool is in another castle.

The storage

We were previously using Redis to store the live stream data, but we thought Cassandra was needed to offer DVR functionality easily (although we still use Redis a lot). However, Cassandra’s response time increased with load up to the point where clients started to time out and video playback stopped completely.

We were using it as a queue, which turns out to be an anti-pattern. We then denormalized our data, changed to LeveledCompactionStrategy and set durable_writes to false, since we could treat our live stream as ephemeral data.

Finally, and most importantly, since we knew the maximum size a playlist could have, we could specify the start column (filtering with id > minTimeuuid(now - playlist_duration)). This greatly mitigated the effect of tombstones on reads. After these changes, we achieved a latency on the order of 10ms at the 99th percentile.
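The storage settings and the bounded read can be sketched like this. The keyspace, table and column names are hypothetical, as is the replication setting; only durable_writes, LeveledCompactionStrategy and the minTimeuuid filter come from the text above. The `%s` placeholders follow the style of the DataStax Python driver for non-prepared statements, and nothing is executed here.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema: names are illustrative, not the production ones.
CREATE_KEYSPACE = """
CREATE KEYSPACE IF NOT EXISTS live
WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 3}
AND durable_writes = false
"""

CREATE_TABLE = """
CREATE TABLE IF NOT EXISTS live.chunks (
    stream text,
    id timeuuid,
    uri text,
    duration float,
    PRIMARY KEY (stream, id)
) WITH compaction = {'class': 'LeveledCompactionStrategy'}
"""

# Only rows newer than the lower bound are read, so older rows
# (and their tombstones) are never scanned.
SELECT_WINDOW = (
    "SELECT id, uri, duration FROM live.chunks "
    "WHERE stream = %s AND id > minTimeuuid(%s)"
)


def playlist_window(stream, playlist_duration_s, now=None):
    """Return the CQL statement and parameters for the current playlist window."""
    now = now or datetime.now(timezone.utc)
    start = now - timedelta(seconds=playlist_duration_s)
    return SELECT_WINDOW, (stream, start)
```

With a driver session at hand, the pair returned by `playlist_window` could be passed straight to `session.execute(cql, params)`.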

tip: limit your queries + denormalize your data + send instrumentation data to graphite + use SSD.

The output

With all the data and metadata, we could build the HLS manifest and serve the video chunks. The only thing we struggled with was that we didn’t want to add an extra server to fetch and build the manifests.

Since we had already invested a lot of effort in Nginx+Lua, we thought it would be possible to use Lua to fetch and build the manifest. It was a matter of building a Lua driver for Cassandra and using it. One good thing about this approach (rebuilding the manifest) was that in the end we realized we were almost ready to serve DASH.
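The manifest rebuilding itself is simple string assembly. In production it runs as Lua inside Nginx; the sketch below uses Python only to illustrate the logic, and the function name and segment fields are assumptions.

```python
import math


def build_media_playlist(segments, media_sequence):
    """Build a live HLS media playlist from segment metadata.

    Each segment is a dict with "uri" and "duration" (seconds).
    """
    longest = max((s["duration"] for s in segments), default=1)
    # The target duration must be an integer no smaller than any segment.
    target = max(1, math.ceil(longest))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{media_sequence}",
    ]
    for seg in segments:
        lines.append(f"#EXTINF:{seg['duration']:.3f},")
        lines.append(seg["uri"])
    # No #EXT-X-ENDLIST tag: the stream is live, so players keep reloading.
    return "\n".join(lines) + "\n"
```

Because the playlist is generated from stored metadata rather than copied from disk, the same data could feed a different manifest format, which is why serving DASH looked close.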

tip: test your lua scripts + check the lua global vars + double check your caching config

The player

In order to provide a better experience, we chose to build Clappr, an extensible open-source HTML5 video player. With Clappr – and a few custom extensions like PiP (Picture In Picture) and Multi-angle replays – we were able to deliver a great experience to our users.

tip: open source it from day 0 + follow the flow issue -> commit FIX#123

The sauron

To keep an eye on all these systems, we built a monitoring dashboard using mostly open source projects such as logstash, elasticsearch, graphite, grafana, kibana, seyren, angular, mongo, redis, rails and many others.

tip: use SSD for graphite and elasticsearch

The bonus round

Although we didn’t open source the entire solution, you can check out most of its parts:

- Python HLS manifest parser / utility

- Pure lua Cassandra client driver

- Clappr

- Live thumbnail

- Audio only from HLS nginx module

- Python lock (redis)

- P2P and HTTP live streaming

Interesting 🙂 We gave a talk about the same subject last year at Velocity 🙂 We had the same issues with our platform, though we are using Mediaroom and Microsoft technologies.

You can check the slides in the following link:

http://www.slideshare.net/almudenavivanco/velocity2014-gvp

Sadly, the video recorded at Velocity is not free 😦 But it is hosted with last year’s Barcelona Velocity talks.

What we feared the most was a final Brazil – Spain 😉

[…] http://leandromoreira.com.br/2015/04/26/fifa-2014-world-cup-live-stream-architecture/ […]

What kind of latencies did you observe between broadcast and playback? How did you deal with users with slow connections? Did you playback from the latest frames or did you catch up?

We didn’t measure this latency (twitch.tv does that in such a great way). What I think we could do (it’s not rocket science) is:

Sum:

+ ingest point (rtmp input) buffer

+ network latency between the stream generator and our server

+ delay introduced by hls generator

+ delay response of cassandra

+ latency between final users and our edge servers

———-

That gives the latency from the RAW input to the final users (roughly speaking, we had a 7-11s delay from real time).

Slow connections: we leave this problem to the HLS adaptive algorithm (in the best case scenario you will only consume the lower bitrate).

“Did you playback from the latest frames or did you catch up?”

I might not have understood you, but we also rely on the way HLS playback works (when you switch video quality it tries to find a keyframe; when you start a video it checks what kind of video it is and might start playing from the latest chunks, or take the media sequence into account…)

[…] FIFA 2014 World Cup live stream architecture | Leandro Moreira – […]

Indeed it’s a nice post.

I just have one single question, which I’ll never understand: why did you decide to rely so much on adaptive streaming (DASH)?!?! I’m asking this because you guys did not even provide the option to manually set the video quality in the player…. Sometimes users do not have only a bandwidth bottleneck (which is partially tackled by DASH); they can also have CPU or RAM problems, which can cause a lot of stalling during playback…….

I would suggest that you bring back manual video quality selection in the Globo player, since, e.g., Premiere FC is sometimes very, very hard to watch. I don’t want to always watch it in HD quality with multiple stalls during the transmission; I prefer to watch it in a lower quality but without any problems. 🙂

That’s my two cents.

Cheers!

You’re right, the adaptation algorithm for adaptive streaming over HTTP is not perfect. By the way, we use HLS, not DASH (yet).

A great paper about it can be found at http://www.tkn.tu-berlin.de/fileadmin/fg112/Papers/Adaptation_Algorithm_for_Adaptive_Streaming_over_HTTP.pdf

not really

Great article, Leandro! Congratulations. It’s great to see a few friends’ faces on the HN front page 🙂

Thank you very much, Ryu!

The dashboard thing: did you open source it? Will you?

We may do a post about this dashboard :D!

But the thing is: *it is just an aggregation and presentation* over the available data source (mostly graphite)

Concerning “tip: maybe the best language / tool is in another castle.” – what would that be? 🙂

That’s a puzzling question, since my answer can only represent my own preferences 😛 Anyway, I think maybe Go, Clojure or any language more geared towards “multi-tasking”, although our Python solution is handling it pretty well.

Maybe Elixir 🙂

There is a nice use case presented by Doug Rohrer with a project that deals with thousands of connections.

Check the presentation here: https://youtu.be/a-OCorBXX7M

Anyway, congratulations for the great project and article!

The sauron is not open source?

No, it’s not! We may do a post about this dashboard :D!

But the thing is: *it is just an aggregation and presentation* over the available data source (mostly graphite)

I would love it if you made a post about it.

Great post! Thanks for sharing 🙂

Nice post. What’s the browser support for the video player?

We tried the latest versions of the most used browsers: https://github.com/clappr/clappr#supported-formats

I am experimenting with building a video server and I would like to know what TS segment size you used for the 2014 World Cup live videos. I would also like to know the initial buffer size and the general buffer size. Are they dynamically controlled by the Clappr player or are they fixed?

Thank you.

Hey Torino, we were able to change the chunk size based on our predictions and desires, but we generally went with 2s. As for the buffer sizes for the encoder and players, I can’t remember.

This buffer size is interesting, since the HLS default segment size is (or was) 10s. It looks like things are different in real life.

Thank you for your answer.